Credits:

Produced in collaboration with creative agency Interbrand, New York

Client: Microsoft

YesYesNo Team:

Zach Lieberman, Molmol Kuo, Adam Carlucci, Dan Moore

CAD | Exhibition design: Marcela Godoy

The Music Box

The Music Box installation uses the body and gesture as a musical interface, creating an enjoyable environment for play and experimentation and a public celebration of the joy of making music.

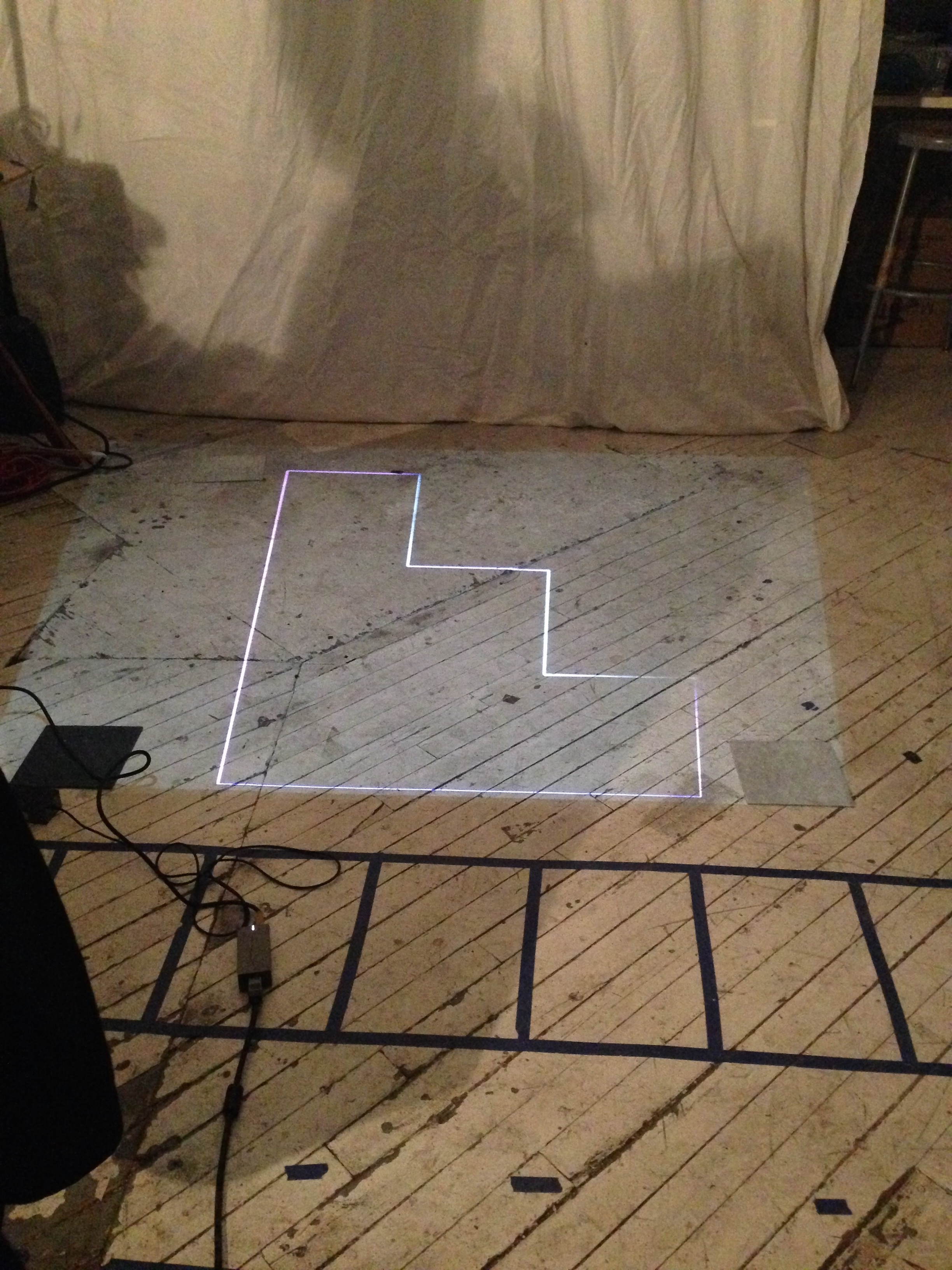

It is a real-time interactive software system that is played by dancing, moving and jumping. Physically, it is an immersive space consisting of a projection-mapped floor/stage and a Kinect V2 camera. One or more participants can be on the stage at once, and they perform the instrument with real-time postures such as punching, kicking and spinning, or use the velocity of the whole body to generate, distort and compose music and beats. The Kinect camera affords a plethora of data that can be integrated to make the instrument more malleable and more interesting to play and jam with. For example, we use foot movement not only to trigger notes but also to shape the sound of each note, looking at things like angle, relative position, velocity and knobs. Shaking your fists can mimic a shaker, and a hip drop can sound really good through a pair of subwoofers.
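To make the foot-driven behaviour described above more concrete, here is a minimal C++ sketch (C++ being the language of openFrameworks) of one way a single foot might both trigger a note and shape it. It assumes the foot joint's height above the floor and its normalised position across the stage have already been extracted from the Kinect skeleton each frame. `FootStrikeDetector`, `NoteEvent`, the thresholds and the C major scale are hypothetical illustrations, not the project's actual code or any Kinect SDK API.

```cpp
#include <algorithm>
#include <optional>

// Hypothetical note event: MIDI-style pitch and velocity plus a stereo pan.
struct NoteEvent {
    int pitch;     // MIDI note number
    int velocity;  // 1..127
    float pan;     // -1 (left) .. +1 (right)
};

// Hypothetical foot-strike detector. Inputs are a foot joint's height above
// the floor (metres) and its normalised position across the stage (0..1),
// sampled once per camera frame. Thresholds are illustrative guesses.
class FootStrikeDetector {
public:
    explicit FootStrikeDetector(float floorHeight = 0.05f, float minSpeed = 0.3f)
        : floorHeight_(floorHeight), minSpeed_(minSpeed) {}

    // dt: seconds since the previous frame. Returns a note on a strike frame.
    std::optional<NoteEvent> update(float footHeight, float stageX, float dt) {
        std::optional<NoteEvent> event;
        if (hasPrev_ && dt > 0.0f) {
            // Positive when the foot is moving down.
            float downSpeed = (prevHeight_ - footHeight) / dt;
            // Fire only on the frame the foot crosses the floor threshold,
            // so a foot resting on the floor does not retrigger.
            bool crossedFloor = prevHeight_ > floorHeight_ && footHeight <= floorHeight_;
            if (crossedFloor && downSpeed >= minSpeed_) {
                // Faster stomps give louder notes; 2 m/s or more saturates at 127.
                float t = std::clamp(downSpeed / 2.0f, 0.0f, 1.0f);
                int velocity = 1 + static_cast<int>(t * 126.0f);
                // Position across the stage picks one of 8 C major notes and sets pan.
                static const int scale[8] = {60, 62, 64, 65, 67, 69, 71, 72};
                float x = std::clamp(stageX, 0.0f, 1.0f);
                int idx = std::min(7, static_cast<int>(x * 8.0f));
                event = NoteEvent{scale[idx], velocity, x * 2.0f - 1.0f};
            }
        }
        prevHeight_ = footHeight;
        hasPrev_ = true;
        return event;
    }

private:
    float floorHeight_;
    float minSpeed_;
    float prevHeight_ = 0.0f;
    bool hasPrev_ = false;
};
```

Each returned `NoteEvent` would then be forwarded to the sound engine; the source does not say how the software talks to Ableton Live, so that step is left out. Angle or relative position could shape the note in the same way, by mapping them onto further event fields.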

The Music Box installation was launched at the Grammys red carpet event.

The software is made with openFrameworks, with Ableton Live used for sound design.

Inspirations

We are fans of Patatap (http://www.patatap.com/). It is super fun and simple, and particularly good at having different types of sounds that all work well together. While we were making The Music Box, we woke up every morning to the music of Le Tone, starting our day with tracks like Joli Dragon. This simple, playful and happy mood had a great effect on how we designed the user interface and chose the sounds.

The Software

There are two different modes: a Piano mode and a percussion mode (which we called the Poet Mode). Each mode responds to what you do with your body in a different way. The Kinect also provides a very detailed skeleton, along with things like overall body orientation and movement, and we use this data to drive different filters and sonic effects. The goal is to make something as immediately understandable as a traditional instrument, but at the same time deeply engaging, fun and reactive. We started by making a floor piano using just the Kinect (remember Tom Hanks playing the floor piano in BIG?), and later we changed the stage design to a tangram with video projection.
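The skeleton-driven filters mentioned above could be approached along the lines of the following C++ sketch, which maps one normalised body-movement value to a low-pass filter cutoff. How that value is computed (for example, the average speed of all tracked joints) and how the cutoff reaches the sound engine are assumptions left outside the sketch; `MovementToCutoff` and its default range and smoothing factor are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical mapping from a normalised body-movement measure
// (0 = still, 1 = the fastest movement we expect) to a filter cutoff in Hz.
class MovementToCutoff {
public:
    explicit MovementToCutoff(float minHz = 200.0f, float maxHz = 8000.0f,
                              float smoothing = 0.9f)
        : minHz_(minHz), maxHz_(maxHz), smoothing_(smoothing), current_(minHz) {}

    // Call once per frame; returns the smoothed cutoff in Hz.
    float update(float movement) {
        float m = std::clamp(movement, 0.0f, 1.0f);
        // Exponential sweep: equal steps in movement give equal musical intervals.
        float target = minHz_ * std::pow(maxHz_ / minHz_, m);
        // One-pole smoothing so skeleton jitter does not make the filter "zipper".
        current_ = smoothing_ * current_ + (1.0f - smoothing_) * target;
        return current_;
    }

private:
    float minHz_;
    float maxHz_;
    float smoothing_;  // 0 = no smoothing, closer to 1 = slower response
    float current_;
};
```

The exponential mapping spreads the sweep evenly in musical terms (halfway between 200 Hz and 8 kHz lands near 1.26 kHz rather than 4.1 kHz), and the one-pole smoothing trades a little latency for stability against noisy joint positions.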

Some test demos from the process

A corner of the stage was mapped in the software to act as a button for transitioning between scenes.
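A floor area acting as a button can be sketched as a rectangle hit test with a dwell time, so that someone merely walking past the corner does not switch scenes. The C++ sketch below assumes foot positions have already been mapped into the same floor coordinates as the projection; `CornerButton`, `Point` and the one-second dwell are hypothetical choices, not details from the project.

```cpp
#include <vector>

// A point on the stage floor, in a hypothetical floor coordinate system
// shared with the projection mapping.
struct Point {
    float x;
    float y;
};

// Hypothetical "floor button": an axis-aligned rectangle in one stage corner.
// It fires once after someone has stood inside it for `dwellSeconds`, and it
// must be vacated before it can fire again.
class CornerButton {
public:
    CornerButton(Point minCorner, Point maxCorner, float dwellSeconds = 1.0f)
        : min_(minCorner), max_(maxCorner), dwell_(dwellSeconds) {}

    // feet: tracked foot positions this frame; dt: seconds since the last frame.
    // Returns true only on the frame the button fires.
    bool update(const std::vector<Point>& feet, float dt) {
        bool occupied = false;
        for (const Point& p : feet) {
            if (p.x >= min_.x && p.x <= max_.x && p.y >= min_.y && p.y <= max_.y) {
                occupied = true;
                break;
            }
        }
        if (!occupied) {
            held_ = 0.0f;
            fired_ = false;  // re-arm once the corner is empty
            return false;
        }
        held_ += dt;
        if (!fired_ && held_ >= dwell_) {
            fired_ = true;
            return true;
        }
        return false;
    }

private:
    Point min_;
    Point max_;
    float dwell_;
    float held_ = 0.0f;
    bool fired_ = false;
};
```

On the frame `update` returns `true`, the application would advance to the next scene, for instance with `scene = (scene + 1) % sceneCount`. Requiring the corner to be vacated before re-arming keeps one visit from cycling through several scenes.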